Tips for Dealing With Errors

People often ask me how to become a top engineer. One easy trick is to check your production error tracker every week and fix a couple of errors each time.

Engaging with your production error tracker is a valuable learning opportunity. It's a chance to understand how to design systems with fewer errors and develop a sense of ownership.

In the next few posts, I will cover crucial tips and tricks for dealing with run-time exceptions and errors. These insights will be invaluable in your journey to becoming a top engineer.

Today, I’m going to cover tips about:

👀 Monitoring

🔇 Reducing the noise

🔨 Fixing errors

📋 Processes to deal with errors

So, let's get started 👉

👀 Monitoring

The tool I have used the most for error reporting is Sentry. It is one of the first tools I add to every new project I start.

I often use multiple Sentry projects for the same product. For example, at Product Hunt, we had projects for:

[JS] Next.js frontend

[Node] Next.js SSR server

[Ruby] Ruby on Rails private API and admin

[Ruby] Ruby on Rails public API

[Ruby] Sidekiq background workers

Even though all [Ruby] projects used the same codebase, they had very different usage patterns and error frequencies.

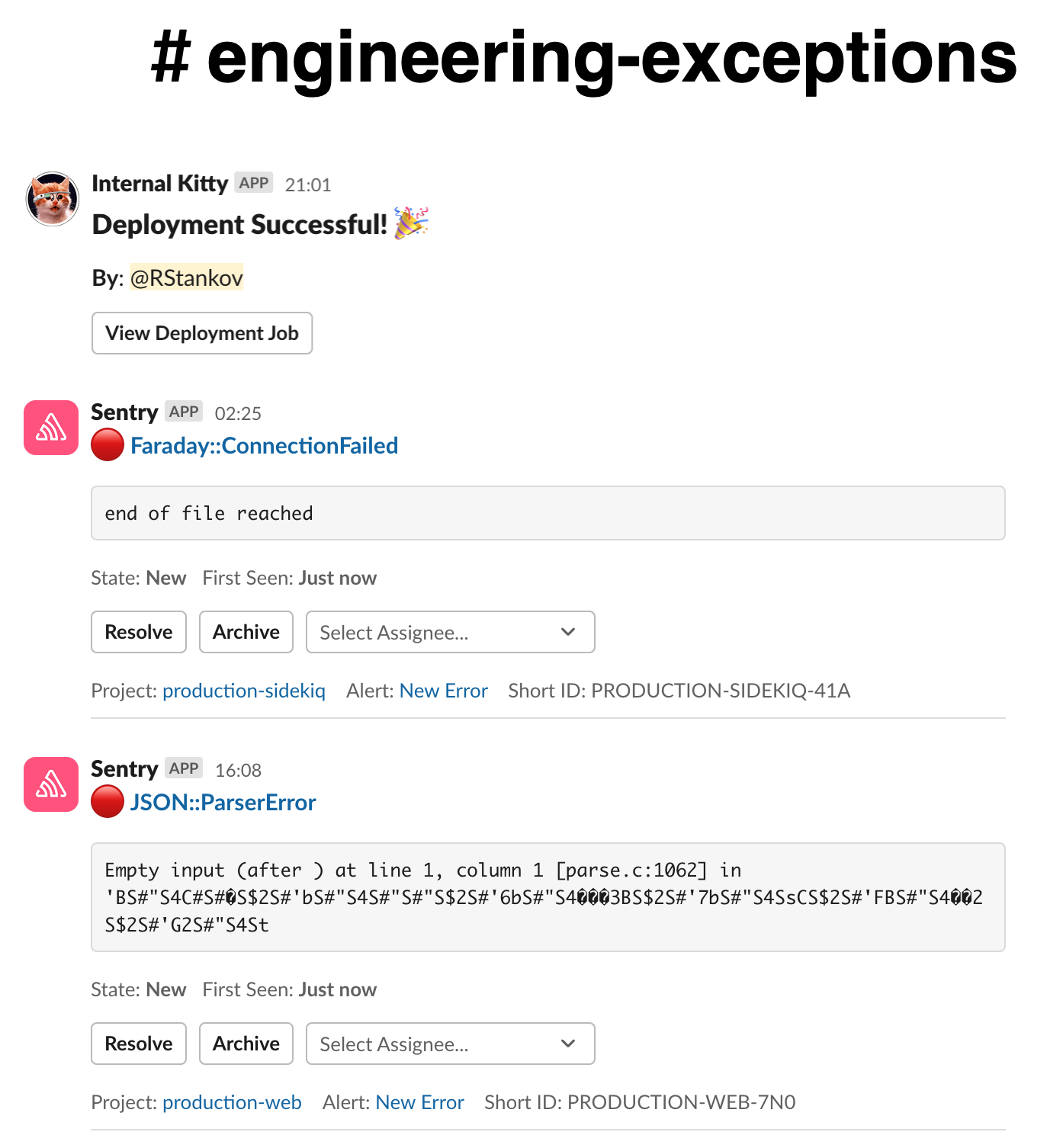

Create a dedicated Slack channel where every new error is logged. Log all deployments in this channel as well, so you can correlate them with spikes in errors; this makes it easier to identify and address issues caused by recent changes.
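Posting deployments to that channel can be a single step at the end of a deploy script. Here is a minimal sketch, assuming you use a Slack incoming webhook (which accepts a JSON body with a `text` field). The method names and the `env`/`revision`/`deployer` fields are hypothetical placeholders for whatever your deploy tooling exposes.

```ruby
require "json"
require "net/http"
require "uri"

# Builds the JSON body for a Slack incoming webhook.
# env, revision and deployer are illustrative fields; use what your deploy tool provides.
def deploy_message(env:, revision:, deployer:)
  { text: ":rocket: Deployed #{revision} to #{env} (by #{deployer})" }.to_json
end

# Sends the message to the webhook URL configured for the errors channel.
def notify_deploy(webhook_url, body)
  Net::HTTP.post(URI(webhook_url), body, "Content-Type" => "application/json")
end
```

After a successful deploy you would call something like `notify_deploy(ENV.fetch("SLACK_ERRORS_WEBHOOK_URL"), deploy_message(env: "production", revision: sha, deployer: user))`; the environment variable name is made up for this example.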

This channel is a good place to triage errors and assign them to teammates. Each error message can serve as the discussion thread for that particular issue.

I turn off Sentry's email notifications for new errors because anything serious is already visible in Slack.

I keep Sentry's “Weekly Report,” which gives a good overview of the week.

💡 The key here is that you and your team shouldn’t have to remember to check for errors. Sentry should notify you.

🔇 Reducing the noise

When you start monitoring, the worst that can happen is for people to start ignoring it. This usually happens because there are too many errors, especially unactionable ones. Or, as I like to say, there is “too much noise”.

So, after you have your monitoring, the next step is to reduce the noise.

You can start reducing noise by muting errors that can’t be acted upon, such as routing errors (404s) or errors raised when containers shut down gracefully.

Here is an example from a Ruby on Rails application:

```ruby
Sentry.configure do |config|
  # Append to the SDK's default exclusions instead of replacing them.
  # Class names are given as strings, so the constants don't need to be loaded here.
  config.excluded_exceptions += [
    'Rack::Timeout::RequestExpiryError',        # timeout
    'Rack::Timeout::RequestTimeoutException',   # timeout
    'ActionController::RoutingError',           # 404
    'ActionDispatch::ParamsParser::ParseError', # malformed request
    'Sidekiq::Shutdown',                        # graceful process stop
  ]
end
```

Be very careful here because you don’t want to end up ignoring everything.

If you are overwhelmed and know that certain parts of your system produce more errors than you can deal with, temporarily create a separate Sentry project that acts as a “junk drawer” and redirect those errors there. Once the main project is clean, remove the redirect.
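The tricky part of a junk drawer is deciding which errors go there. A minimal sketch of that decision, assuming you park errors by where they come from in the backtrace; `JUNK_DRAWER_PATHS` and `sentry_project_for` are hypothetical names, and how you then send the event to the second project depends on your SDK setup.

```ruby
# Hypothetical backtrace fragments for noisy areas you have decided to park for now.
JUNK_DRAWER_PATHS = ["app/services/legacy_import", "app/jobs/legacy_sync"].freeze

# Returns :junk_drawer when any backtrace frame comes from a parked area,
# otherwise :main. Exceptions that were never raised have no backtrace.
def sentry_project_for(exception)
  backtrace = exception.backtrace || []
  parked = backtrace.any? do |frame|
    JUNK_DRAWER_PATHS.any? { |path| frame.include?(path) }
  end
  parked ? :junk_drawer : :main
end
```

Keeping the list in one constant makes it easy to see what is parked and to delete entries as you clean them up.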

🔨 Fixing Errors

What should you fix first if you have a lot of errors? My prioritization framework is as follows:

🔥 Critical errors - ones that cause major damage

😇 Easiest and noisiest

Critical errors mostly prioritize themselves: the ones that bring the whole system down are handled immediately, and the very complex ones are scheduled as part of regular work.

Once the critical errors are under control, the focus shifts to the non-critical ones. The goal here is to reduce noise, so it's best to start with the easiest ones (ideally also the most frequent). In this case, quantity matters more than severity.

This approach ensures that the most disruptive issues are resolved first, followed by those that contribute most to the overall error count.
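If you export your open errors into a list, the ordering above can be sketched as a sort key. This is a minimal sketch, assuming each error has been tagged by hand during triage; `TrackedError` and its fields are hypothetical, not something Sentry provides.

```ruby
# One tracked error; critical and easy are judgment calls made during triage.
TrackedError = Struct.new(:title, :critical, :easy, :events_per_week, keyword_init: true)

# Orders errors by the framework above:
# 1. critical errors first,
# 2. then easy fixes before hard ones,
# 3. then the noisiest (most frequent) first.
def triage_order(errors)
  errors.sort_by do |e|
    [e.critical ? 0 : 1, e.easy ? 0 : 1, -e.events_per_week]
  end
end
```

Within the same priority bucket, frequency breaks the tie, which matches the idea that quantity matters more than severity once critical errors are handled.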

When I don't have a solution for fixing a non-critical error within 20 minutes, I move on to the next error.

💡 Fixing errors means fixing errors, not just silencing them by try-catching and ignoring them.

Sometimes, you won't know how to fix an error, but you still don't want the user to see a 500 error. In this case, you can catch the error, log it in Sentry (so you are reminded about the core issue), and return a proper error message.
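Here is a minimal sketch of that pattern. To keep it runnable without the gem, `ErrorReporter.capture` is a hypothetical stand-in for `Sentry.capture_exception`, and `with_reported_fallback` is an illustrative helper name.

```ruby
# Stand-in for Sentry.capture_exception so the sketch runs without the gem.
module ErrorReporter
  def self.reported
    @reported ||= []
  end

  def self.capture(error)
    reported << error
  end
end

# Runs the block; if it raises, reports the error (so the root cause stays
# visible in the tracker) and returns a user-facing fallback instead of a 500.
def with_reported_fallback(fallback)
  yield
rescue StandardError => e
  ErrorReporter.capture(e)
  fallback
end
```

In a controller, the fallback would be whatever friendly error response fits, for example `with_reported_fallback({ error: "We couldn't generate this invoice. We've been notified." }) { generate_invoice(id) }`, where `generate_invoice` is hypothetical. The difference from silencing is the `capture` call: the error still shows up in the tracker.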

You want to fix the root causes. Here is an example: the following code raises a `NoMethodError` because `account.subscription` is `nil`.

```ruby
account.subscription.status
```

According to your business rules, all accounts should have subscriptions. The “bad” fix would be to check for `nil`, because this hides the issue and makes it harder to fix later.

What you should do here:

Check for other accounts without a subscription

Find out why those accounts don't have a subscription

Fix that cause

Add missing subscriptions to accounts
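The first and last steps can be sketched in plain Ruby. In a Rails app, the lookup would be an ActiveRecord query (for example `Account.where.missing(:subscription)` on Rails 6.1+); here `Account`, `Subscription`, and the default plan are hypothetical stand-ins so the sketch runs on its own.

```ruby
Subscription = Struct.new(:plan, :status)
Account = Struct.new(:id, :subscription)

# Step 1: find every account that breaks the "all accounts have a subscription"
# rule, not just the one that raised the error.
def accounts_missing_subscription(accounts)
  accounts.select { |account| account.subscription.nil? }
end

# Last step, after the cause is fixed: backfill the missing subscriptions.
# The default plan and status are assumptions; use your own business rules.
def backfill_subscriptions!(accounts, plan: "free", status: "active")
  accounts_missing_subscription(accounts).each do |account|
    account.subscription = Subscription.new(plan, status)
  end
end
```

Running the first method again after the backfill is a cheap way to confirm the data now matches the rule.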

💡 Errors are valuable information about your system; don’t ignore them.

When you start fixing errors in bulk, you will start noticing patterns, leading you to build more resilient code and improve your internal tooling.

For example, I noticed that many of our background jobs were failing with random network errors and then succeeding after a couple of retries. So, I made our background jobs automatically catch network errors and reschedule themselves, and only log the error in Sentry if the job had failed more than 30 times.
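A minimal sketch of that idea, not the actual Product Hunt code. It assumes the job knows its attempt number, and `reschedule`/`report` are hypothetical callables standing in for your queue and Sentry. The list of "network" errors is also an assumption, and giving up after reporting is one possible choice.

```ruby
require "socket"
require "timeout"

# Errors treated as transient network failures (an assumed list; HTTP client
# libraries often raise their own error classes too).
TRANSIENT_NETWORK_ERRORS = [
  Timeout::Error, SocketError, Errno::ECONNRESET, Errno::ECONNREFUSED, Errno::ETIMEDOUT
].freeze
MAX_SILENT_FAILURES = 30

# Runs one attempt of a job. On a transient network error it quietly reschedules,
# and only reports once the job has failed more than MAX_SILENT_FAILURES times.
# Any other error propagates as usual.
def perform_with_network_retries(attempt:, reschedule:, report:)
  yield
rescue *TRANSIENT_NETWORK_ERRORS => e
  if attempt > MAX_SILENT_FAILURES
    report.call(e)
  else
    reschedule.call(attempt + 1)
  end
end
```

Keeping this in one shared wrapper, rather than in each job, is what turns a pattern you noticed into tooling the whole team benefits from.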

📋 Processes to deal with errors

Here are some company processes I have used to deal with errors. From a management point of view, you want to have a process around errors. Otherwise, it is no-man's land.

🍸 Happy Friday

This is a process I’m currently using as a solo developer at Angry Building. Every Friday, I dedicate time specifically to fixing bugs and addressing errors, ensuring that the system remains stable and any outstanding issues are resolved before the weekend.

We used this process in the early days at Product Hunt before we reached 7-8 engineers.

A developer has dedicated time on Friday to:

🕷 Fix bugs

💥 Fix exceptions

🔧 Bump dependencies

💸 Pay technical debt

⚔️ Strike Team

As Product Hunt grew, this became too chaotic. For some time, we had a dedicated “Strike Team” of 2 junior developers. They were responsible for fixing bugs and exceptions, bumping dependencies, and working with our support team.

Of course, they didn't fix every error: if a recent feature caused errors, the team that built it fixed them, and if an error was too complex, a more senior engineer paired with them.

🐞 Bug Duty

However, when the team at Product Hunt grew to 12+ people and the "Strike Team" engineers became more senior, we disbanded the team and introduced a "Bug Duty".

Every sprint, a different engineer rotated into this role. I have written before about this process 👉 in my blog.

I think this worked a lot better than the dedicated "Strike team".
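If you want the rotation to be predictable, a simple round-robin over the team is enough. A minimal sketch, assuming sprints are numbered sequentially; `bug_duty_for` and the team list are hypothetical.

```ruby
# Picks the engineer on bug duty for a sprint by cycling through the team in order.
def bug_duty_for(sprint_number, engineers)
  raise ArgumentError, "team is empty" if engineers.empty?

  engineers[sprint_number % engineers.size]
end
```

With a fixed ordering, everyone can see weeks ahead when their turn comes, which makes it easier to plan other work around it.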

🐛 Bug Bash

All previous tips in this post apply when you have a relatively small number of errors. However, if you are flooded, you can try a "Bug Bash", a sprint focused exclusively on fixing exceptions.

For 1 sprint, most of the team focuses only on fixing exceptions. I suggest making it fun by giving prizes and titles like "toughest error", "most errors fixed", and "smartest workaround". 🏆

A couple of gotchas:

Good coordination is needed because multiple people might try to fix the same issue, or someone might claim an issue and then abandon it without releasing it. You can use a Trello board with a card for each error and a limit on the "In Progress" column, so that each developer can have only one error there at a time.

Many of the fixes might hide root causes by simply ignoring errors.

You need a proper error-tracking setup and a follow-up process; otherwise, in a month or two, you will be flooded again.
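The claiming rules from the first gotcha can be sketched as a tiny in-memory board. This is a hypothetical stand-in for the Trello setup, not a Trello integration; `BugBashBoard` and the card ids are made up for illustration.

```ruby
# Each error card can be claimed by one developer at a time, and each developer
# can hold at most WIP_LIMIT cards "In Progress".
class BugBashBoard
  WIP_LIMIT = 1

  def initialize
    @claims = {} # card id => developer
  end

  # Returns true if the claim succeeded, false if the card is taken
  # or the developer is already at the limit.
  def claim(card, developer)
    return false if @claims.key?(card)
    return false if in_progress_for(developer).size >= WIP_LIMIT

    @claims[card] = developer
    true
  end

  # Only the developer holding a card can release it back to the pool.
  def release(card, developer)
    @claims.delete(card) if @claims[card] == developer
  end

  def in_progress_for(developer)
    @claims.select { |_card, dev| dev == developer }.keys
  end
end
```

The explicit `release` step is what prevents abandoned claims: nobody can pick up a second error without first handing back the one they gave up on.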

Conclusion

One sign of a stable system is that it runs without errors. I take a lot of care to keep my systems running without errors.

Handling errors is a huge topic, and because of this, I’m splitting it into a couple of posts.

General tips (this post)

Ruby on Rails tips

JavaScript tips

If you have any tips, strongly agree or disagree with these, or have ideas for something I missed, you can ping me on Threads, LinkedIn, Mastodon, Twitter, or just leave a comment below 📭